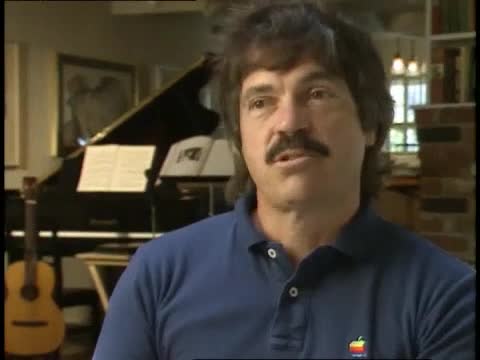

The Machine That Changed the World; Interview with Alan Kay, 1990

- Transcript

Interviewer: ALAN, THE FIRST COMPUTERS WERE LARGE, EXPENSIVE MACHINES THAT HAD BEEN BUILT TO DO CALCULATIONS. HOW DID PEOPLE THINK ABOUT THIS NEW TECHNOLOGY?

Kay: Well, I think that some people thought they were never going to get any smaller. There were calculations about how many Empire State Buildings you needed full of vacuum tubes to do this and that. And other people at Bell Labs who had been working on the transistor had a sense that this could be used as a switch, 'cause AT&T wanted something to replace vacuum tubes. And so I think there were several perceptions of how big the thing was going to be. I think it wasn't until the sixties that people started thinking that computers could get quite small.

Interviewer: PART OF THE PROBLEM WAS THE SIZE, THE OTHER PROBLEM WAS JUST WHAT IT WAS SUPPOSED TO BE FOR....

Kay: Yeah, I think that when they were doing ballistic calculations there was this notion that you would run a program for a very long time. It was really after UNIVAC in the 1950s that people started thinking, "Oh, we might have to do some programming." And people, most notably Grace Hopper, very early on started thinking about higher level programming languages. So that was sort of one flow. And I think the other thing was the urgency of the Cold War got people thinking of air defense. And so scientists at MIT started working on systems that could assess radar and show it to you on a display. And those are the first computers that had displays and pointing devices.

Interviewer: FOR THE MOST PART THOUGH THOSE WERE KIND OF EXCEPTIONS. CAN YOU DESCRIBE WHAT THE BATCH PROCESSING EXPERIENCE WAS LIKE, WHAT IT WOULD HAVE BEEN LIKE?

Kay: Well, I always thought of it as something like a factory. You have this very large thing and there are raw ingredients going in the front and there are some very complex processes that have to be coordinated, then sometime later you get what you were hoping for out of the rear end. And you can think of them also as like a railroad: somebody else is deciding the schedules. I can certainly remember having maybe one run a day, or two runs a day, and you could only get your results then, and you had to work around it and so forth. And so it was very much an institutional way of thinking about computing.

Interviewer: DID THIS PUT MANY PEOPLE OFF COMPUTING?

Kay: I don't think so. I think, you know, happiness is how much the reality exceeds expectations. I think that most people were happy. You know, in the '50s business computing was still predominantly done with punch card machines. And IBM was the company that made those punch card machines. So there was an enormous disparity between what you could do with computers and what most people did.

Interviewer: BUT STILL FOR MOST PEOPLE THE PHYSICAL SIZE AND APPEARANCE OF THE MACHINE WAS A COMPELLING THING AND THAT GAVE IT THIS MYTHOLOGY?

Kay: Well, I think, yeah, my feeling is that anything that is larger than human scale invokes mechanisms concerned with religion, so you have a priesthood with white coats, you know, all the paraphernalia were there, and some people thought it would take over the world and some people wanted it to take over the world, and some people were afraid it would take over the world, and none of those things happened. But that was what it was when it was this large thing, and towards the late '50s many people in different places started saying, "Well, this thing has the potential of being more like a partner, maybe a complementary partner. It can do some things that we can't do well and vice versa, so we should find some way of bringing it down to human scale."

Interviewer: THE COMPLEMENT TO THE INDIVIDUAL?

Kay: The partner to people, not so much directly connected to the institutions. Although of course, the first way it was done, using time-sharing, the mainframe was still owned by the institution.

Interviewer: OKAY NOW, OF THE PEOPLE, OF THESE SORT OF VERY EARLY VISIONARIES, I MEAN, YOU'VE DIVIDED THEM INTO SORT OF TWO GROUPS, HAVEN'T YOU?

Kay: Yeah.

Interviewer: COULD YOU TALK A BIT ABOUT THAT?

Kay: Which two groups? Give me a cue.

Interviewer: WELL, ONE GROUP WAS THE ONES WHO WERE INTERESTED IN AMPLIFYING INTELLIGENCE, SORT OF...

Kay: You know, there are lots of different groups... Okay, must be okay because we're still rolling, right? So, well, I think in the late '50s there were a variety of different conceptions of what you should do with it. Some of those conceptions were had by the very same person. So for instance, John McCarthy, who is a professor at MIT, both wrote memos suggesting we should time-share the computer, and he also thought, more into the future, that we'd be all networked together, and there would be these huge information utilities, it would be like our power and lighting utilities, that would give us the entire wealth of the knowledge of man, and he suggested that what we'd have to have is something like an intelligent agent. An entity not maybe as smart as us, but an expert in finding things, and we would have to give it advice. He called it the "advice-taker."

Interviewer: TELL ME ABOUT TIME-SHARING BECAUSE OBVIOUSLY ONE THING PEOPLE WOULD SAY IS, "HOW COULD EVERYBODY HAVE THEIR OWN COMPUTER?"

Kay: Well, I think that was it. They cost several million dollars back then and people had been fooling around with things a little bit like time-sharing before sort of the official invention of it at MIT. And part of it was just to debug programs. The idea is that debugging is one of the most painful processes. Incidentally, you probably know that there was actually a bug. The first bug was a moth, I think found by Grace Hopper. So it actually has an official... And a friend of mine just recently was having trouble with his laser printer. And nothing that they thought of could possibly work, and finally somebody decided to open it up and look in it, and what it was, was a mouse. The mouse had moved in, it was nice and warm. On top of the circuit board it had set up various places, you know, so it was like a real... and of course nobody understood what it meant when it said that this guy's computer has a mouse problem. 'Cause mice don't have problems. So, you know. So it's an ill wind that blows nobody good.

Interviewer: TELL ME ABOUT THE BASIC CONCEPT OF TIME-SHARING. WHAT WAS THE IDEA?

Kay: Well, time, the idea was that when humans use computers the best way of having them use it is, instead of using all the power for five minutes a day, to take those five minutes of computer power and spread it out over several hours. 'Cause people are slow at typing, and when you're debugging you don't need to do long runs of things. And what you want is lots of interaction, lots of finding out that you're wrong and this is wrong, you can make a little change and this is wrong. So you want to go from something that requires enormous planning to something that is much more incremental. And this is the urge that the people who developed time-sharing had.

Interviewer: BUT HOW COULD YOU GIVE AN INDIVIDUAL EXCLUSIVE ACCESS TO A COMPUTER?

Kay: Well, you couldn't. So the idea is that if the thing cost several million dollars, as McCarthy pointed out, one of the things that you could do is roll in one person's job and do it for a couple of seconds, then roll in another person's job and do it for a couple of seconds. And if you had a fast enough disc for holding all of these jobs then you would be able to handle twenty, thirty or more at once and they wouldn't feel that the lag of a few seconds was going to hurt them.

Interviewer: SO THE COMPUTER IS SWITCHING BETWEEN THE JOBS SO FAST, IS THAT THE WAY IT WORKS?

Kay: Right, right. Well, that's the way it's supposed to work. And of course, the thing that drove personal computing in the '60s into existence was that it proved to be extremely difficult to get reliable response time.

Interviewer: BUT THIS IS LIKE ONE OF THE EARLY IDEAS OF THE USER HAVING AN ILLUSION.

Kay: Yes, indeed. Right, in fact the best one of these was a system called JOSS at RAND Corporation, in which the system was devoted entirely to running this single language. That made things much better. The language was designed for end users. It was the first thing that affected people the way spreadsheets do today. It was designed -- it had eight users on this 1950s machine, but the guy who did it, Cliff Shaw, was a real artist, and the feeling of using this system was unlike that of using anything else on a computer. And people who used that system thought of wanting all of their computing to have the same kind of aesthetic, warm feeling that you had when using JOSS.

Interviewer: IF YOU HAD TO LIST -- IF YOU WERE, I MEAN A VERY CLEAR THINKING PERSON BACK THEN IN THE LATE '50S, YOU KNEW EVERYTHING THEY WANTED COMPUTING TO BE, WHAT WOULD THEY HAVE, SMALL, INTERACTIVE, REALTIME...?

Kay: Well, I think in the '50s the emphasis, you know these things go in waves, the emphasis in the '50s was first on being able to do things incrementally. Second, people wanted to share, so the idea of electronic mail was very early at MIT, and at some point people started thinking that the form of interaction started to have a lot to do with how good you felt, and how puzzled you were and so forth. The invention of computer graphics, modern computer graphics, by Ivan Sutherland in 1962 had a lot to do with people's perceptions. 'Cause once you saw that you couldn't go back. It established a whole new way of thinking about computer interaction, and to this day it has remained an inspiration.

Interviewer: TELL ME ABOUT THAT PIECE OF WORK. HE WAS A GRADUATE STUDENT, WASN'T HE?

Kay: Yes, he had gone to Carnegie Tech, now Carnegie Mellon, and came to MIT to do his Ph.D. work. One of his advisors was Claude Shannon, and another one was Marvin Minsky. And as he has told the story several times, they were then in just the early stages of Jack Licklider's dream to have the computer be a sort of symbiotic partner. And when he went out to Lincoln Labs people were starting to think about that, and there was a marvelous machine there called the TX-2. This was one of the last computers in the US large enough to have its own roof, you know, it was one of these enormous machines originally built for the air defense system. And Ivan made friends with people there and started thinking about something having to do with computer graphics. You know, the air defense system used displays for putting up radar plots and so forth. And light guns were already around and so forth. And so he started thinking about maybe doing a drafting system, and as I recall it one of his original reactions when seeing the kind of graphics you could put on a screen -- because the screens couldn't even draw lines. When they put up a line it was put up with lots of individual dots. And done fairly slowly, so it would flicker and it was pretty awful looking. And Ivan at that point said the best words a computer scientist can ever say, which is, "What else can it do?" And so, in fact, having the display not be great helped what he tried to do on it, because he started thinking of what was the actual power and value of the computer. Now today we have a problem because the displays are good, so our tendency is to simulate paper. But what Ivan started thinking about is what kind of value could the computer bring to the transaction so it would be even worthwhile to sit down and use such an awful looking display.

And the thing he came out with was that the computer could help complete the drawings, so you could sketch -- this is where the idea of Sketchpad -- you could sketch something in. If you were trying to make a flange, well, you just put in the lines for the flange, and then you would tell Sketchpad to make all of these angles right angles. And you could make these two things proportional to each other and so forth. And Sketchpad would solve the problem and straighten out the drawing in front of your eyes into something that was more like what you wanted. So that was a terrific idea. And then he took it another step further, because he realized he could solve real world problems. So you put a bridge into Sketchpad. It had never been told about bridges before, but you could put bridges in and tell Sketchpad about pulling and pushing of things and hang a weight on the bridge and Sketchpad would generate the stresses and strains on the bridge. So it was now acting as a simulation. You put in an electric circuit. Sketchpad had never heard about electric circuits before, but you could put in Ohm's law, and what batteries do, and it would, in order to settle the constraints -- or one of the nicest things was you could put in mechanical linkages. So you could do something like a reciprocating arm like on a locomotive wheel, for going from reciprocating to circular motion. And Sketchpad's problem solver, if it had a problem that it couldn't get an exact solution for, there wasn't just one solution, and of course there isn't for this. What it would do is iterate through all the solutions, so it would actually animate this thing, and right on the screen you would see this thing animating. It was the very thing that you were thinking of.

Interviewer: AND THIS IS VERY EARLY, IT SOUNDS VERY MODERN.

Kay: '62, yeah. Well, you can't buy a system today that does all the things that Sketchpad could back then. That's what's really amazing. It was the first system that had a window, the first system that had icons. Certainly the first system to do all of its interaction through the display itself. And for a small number of people in this community, the Advanced Research Projects Agency research community, this system was like seeing a glimpse of heaven. Because it had all of the kinds of things that the computer seemed to promise in the '50s. And practically everything that was done in the '60s in that community, and into the '70s, had this enormous shadow cast by Sketchpad, or you could maybe think of it better as a light that was sort of showing us the way.

Interviewer: AND THAT'S ONE GLIMPSE OF HEAVEN, ANOTHER GLIMPSE OF HEAVEN I SUPPOSE IS THE WORK OF DOUG ENGELBART.

Kay: Yes, and he was also funded by the Advanced Research Projects Agency, and his original proposal I think was also 1962. 1962 is one of those amazing years. And Engelbart had read about the Memex device that Vannevar Bush, who was President Roosevelt's science advisor and a former professor at MIT, he had written an article in 1945 called "As We May Think." And most of the article was devoted to predictions of the future, and one of them was, he said that sometime in the future we'll have in our home a thing like a desk, and inside the desk on optical storage will be the contents of a small town library, like 5,000 books. There'll be multiple screens, there'll be pointing devices, and ways of getting information in, and pointing to things that are there, and he said that you can form trails that will connect one piece of information to another. He invented a profession called "Pathfinding," that there'd be people called Pathfinders who sold paths. You could buy a path that would connect some set of interesting things to some other set of interesting things. And so this is a complete vision in 1945. And a lot of people read that. I read it in the '50s when I was a teenager because I had seen it referred to in a science fiction story. Engelbart had read it fairly early, when he was in military service. And once you read that thing you couldn't get it out of your mind, because it's the thing that anybody who deals with knowledge would desperately like to have. And so Engelbart in the early '60s started writing proposals, and he finally got ARPA interested in funding it and they started building a proposal of his. And a couple of years later, 1964, he invented the mouse -- to have both a better pointing device than the light pen, and a cheaper one. And they built a system that by 1968 was able to give a very large scale demonstration, to 3,000 people in San Francisco.

Interviewer: AND YOU WERE THERE.

Kay: I was there.

Interviewer: TELL ME ABOUT IT.

Kay: Well, I had seen the system beforehand, because of course I was a graduate student in this community. But still, even having seen the system, the scale of the demo and the impact it had, it was unbelievable. I remember it started off, there was about 3,000 people in this auditorium. It was at one of the, it was the Fall Joint Computer Conference, I think, and all you could see on the stage was this figure with something in his lap and a box in front of him and a couple of things that looked like TV cameras around him. And he had on a headset and he started talking, he said, "I'd like to welcome you to our demonstration." All of a sudden his face appeared 20 x 30 ft wide on this enormous screen, because they borrowed one of the NASA... one of the first video projectors. And they used this huge situation display, and then they used video editing so you could see, while he was giving this demonstration, what he was doing with his hands, with the mouse and the key set, what was going on on the screen and so forth. And that is videotaped, I mean that is something that you can use for your--

Interviewer: YOU WERE TALKING ABOUT DOUG, WHAT DID HE DEMONSTRATE, WHAT SORT OF THINGS DID HE SHOW YOU?

Kay: Douglas Engelbart, he started off just showing us how you could point to things on the screen and indicate them, and started off actually fairly simply, just showing how you could look at information in various ways. He showed something very much like HyperCard -- so he had a little map of how he was going to go home that night, how he was going to go to the library and the grocery store and the drug store and so forth. You could click on each one of those things and it would pop up and show him what he had to get there. And what he demonstrated were the early stages of what we call hypertext today. Lots of response in the system. One of the big shockers was midway through the thing he started doing some collaborative work, and all of a sudden, in an insert on the screen, you saw the picture of Bill Paxton, who was 45 miles away down in Menlo Park, live. Both of them had their mouse pointers on the screen and they were actually doing the kinds of things that people still dream about today. So this was a complete vision. And I think of it as the vision of what we today call personal computing or desktop computing. Except for the fact that the processor was a big time-shared computer, all of the paraphernalia -- Engelbart used a black and white display, 19 inch display, using video to blow up a calligraphic screen and have a mouse. If you looked at the thing today, you'd see something that looked like somebody's office that you could walk into. And what remained was to do something about the problems in response time and all that stuff. And that was something that I had gotten interested in a few years before. And the first machine that I did was a thing called the FLEX Machine and...

Interviewer: JUST BEFORE WE GO INTO FLEX, TELL ME WHAT WAS THE REACTION LIKE AMONG THE COMPUTER COMMUNITY?

Kay: Well, I didn't take a... in fact, as I recall, I actually had the flu or something, but I was determined to go see this thing. ARPA had spent something like $175,000 on this demo and everybody in the ARPA community wanted to show up. I recall that the crowd, you know, he got a standing ovation and he won the best paper in the Fall Joint Computer Conference and so forth. And what was even better is that he had brought up four or five terminals to the system and had them in a room, and people could go in and actually learn to interact with the system a bit. So it was a large scale demonstration. I don't think that anybody has ever traced what people did over the next 15 or 20 years as a result of having been at that demonstration. That would be interesting.

Interviewer: DOUG THOUGHT IT, HOPED IT WOULD CHANGE THE FACE OF COMPUTING AND IS VERY DISAPPOINTED THAT IT DIDN'T. HE THINKS IT DIDN'T REALLY HAVE MUCH IMPACT.

Kay: Well, I mean there are a couple of things that Doug, I mean we thought of Doug as Moses opening the Red Sea. You know, he was like a biblical prophet. And like a biblical prophet he believed very much in his own particular vision. And that vision was not a 100 percent good idea. One of the things that they neglected completely was the learnability of the system. People who used the system were all computer experts who loved it, were willing to memorize hundreds of commands in order to be... If you memorized hundreds of commands and you learned how to use the key set you could fly through this n-dimensional space. It was quite exhilarating to watch people doing this and exhilarating to learn how to do it. The problem with it though was that there were so many barriers towards learning. And there were many other things that were problems. It wasn't a particularly good simulation of paper, partly because he didn't want it to be.

And so the idea that there would be a transition period where you would be producing documents, of course, they printed documents out, but there was no notion of desktop publishing there. The whole system was rather like a violin. And if you were willing to learn how to become a violinist you could play incredible music through the thing. And of course all of us were so completely sold on this system in the late '60s. And the first machine that I did, the FLEX Machine, was an echo of this.

Interviewer: NOW THE FLEX MACHINE. THE OTHER ELEMENT WHICH IS IN THIS, APART FROM THESE FANTASTIC SOFTWARE ACHIEVEMENTS OF SUTHERLAND AND ENGELBART IS OF COURSE THE SIZE OF THE MACHINE. THERE WERE SOME PRECEDENTS, WEREN'T THERE?

Kay: Oh yes, the first personal computer, in my opinion, was the machine called the LINC. If you include size as one of the important things. Of course, you could say that the Whirlwind was a personal computer at MIT, or the TX-2. Some people tried to get Ivan Sutherland to write a paper called "When There Was Only One Personal Computer." And that was him using Sketchpad on the TX-2, which is this thing bigger than a house. But in 1962 Wes Clark did a machine in which part of the design parameter was it was small enough to look over when you're sitting down at a desk, you know. So, it was not supposed to loom over you, it was something you could actually see over. And many important things were done on that machine. In fact quite a few hundred, if not a few thousand of them were built and used in the biomedical community. It was designed for biomedical research, designed to be programmed by its users, who were not computer scientists, even designed to be built by non-computer scientists. They used to have gatherings in the summertime where 30 people or so would come and build their own LINCs and then take them back and stuff. It was a great little machine. It had a little display and display editors and so forth. And so it was something that you could point to when you were trying to think of what to do. And there were other small things. There was a machine called the IBM 1130, which was really an abortion of a machine. It was sort of a keypunch keyboard hooked to one of the early removable disc packs. I mean this was a mess of a machine, but it was the size of a desk and you could sit down. It wasn't designed really to be programmed except by storing programs on cartridge, very funny, you could only store data on the disc. IBM forced you to put the programs on punchcards and that was the only way you could feed them in, it was really hilarious. So there were lots of different kinds of things like that.

Interviewer: WHAT WERE YOU TRYING TO DO WITH FLEX?

Kay: Well, I worked on this machine with a guy by the name of Ed Cheadle, who was really trying to invent what today we would call personal computing. And he had a little machine and he had a little Sony television set, and what he wanted was something for engineers -- he was an engineer -- and he wanted something that would allow them to flexibly do calculations beyond the kinds of things that you do with a calculator. So you should be able to program the machine in something. You should be able to store the programs away. You should be able to get it to do things. And then I sort of came and corrupted the design by wanting it to be for people other than engineers. I'd seen JOSS, and I'd also recently seen one of the first object-oriented programming languages, and I realized how important that could be. And then Cheadle and I escalated -- the problem is that he and I got along really well, and so we escalated this design beyond the means of the time to build it practically. But we did build one, and it had many things that people associate today. It fit on top of a desk -- special desks, because it weighed hundreds of pounds. It had a fan likened to a 747 taking off, because the integrated circuits back then had maybe 8 or 16 gates on a chip. So this thing had about 700 chips in it. It had a high-resolution, calligraphic display. It had a tablet on it and it had a user interface that included multiple windows, things like icons and stuff. But it was rather like trying to assemble a meal, maybe make an apple pie, from random things that you find in the kitchen -- like no flour, so you grind up Cheerios, you know? You wind up with this thing that looks sort of like an apple pie, but actually it isn't very palatable. So the result of this machine was a technological success and a sociological disaster.

And it was the magnitude of the rejection by non-computer people we tried it on that got me thinking about user interface for the first time. And I realized that what Cliff Shaw had done in JOSS was not a luxury, but a necessity. And so it led to other ways of looking at things.

Interviewer: SO IF WE GO BACK TO ORIGINALLY YOU WERE SAYING PEOPLE THOUGHT OF MAINFRAMES AS LIKE FACTORIES, RIGHT? ...THESE EARLY ATTEMPTS AT PERSONAL COMPUTERS, ARE WHAT, LIKE MODEL T....?

Kay: Yeah, I think Engelbart -- I think one of the ways that we commonly thought about Engelbart's stuff was he was trying to be Henry Ford. You could think of the computer as a railroad, and the liberating thing for a railroad is the personal automobile. And Engelbart thought of what you were doing on his system as traveling, so you're moving around from link to link in the hyperspace, and he used terms like "thought vectors" and "concept space" and stuff. Nobody knew what it meant, not sure he did either, but it was that kind of a metaphor.

Interviewer: WHAT'S WRONG WITH THAT METAPHOR, ULTIMATELY?

Kay: Well, I don't think there is anything particularly wrong with it, but when you're doing things by analogy, you always want to pick the right analogy because there's so many ways of making them. And the thing that was limiting about it when you apply it to humanity, as an example, is that with a car you expect to take a few months to learn how to do it, and that was certainly true. It's something that doesn't extend into the world of the child. There's a whole bunch of things, but of course we didn't think of it that way. We thought of the car as one of the great things of the 20th century and it changed our society and everything. So we were definitely using that as a metaphor. And in fact the thing that changed my mind had nothing to do with rejecting the car as a metaphor, it was finding a better metaphor, one that completely possessed me. And that came about from seeing quite a different system. I had called the FLEX machine a personal computer. I think that was the first use of that term. While I was trying to figure out what was wrong with it I happened to visit RAND Corporation over here in Santa Monica and saw sort of a follow-on system to JOSS that they had done for their end users, who were people like RAND economists. These people loved JOSS but they hated to type.

And so, in the same year the mouse was invented, the RAND people had invented the first really good tablet. It was a high-resolution thing and they decided that the thing to do was to get rid of keyboards entirely, and so the first really good hand character recognizer was developed there. And they built an entire system out of it called GRAIL, for GRAphical Input Language. So there's no keyboard at all. You interacted directly with the things on the screen. You could move them around. If you drew a square, it recognized you were trying to draw a square, it would make one. If you put in your hand printed characters it would recognize them and straighten them up, and the system was designed for building simulations of the kinds that economists and other scientists would like to build. And using this system was completely different from using the Engelbart system. And this system, it felt like you were sinking your fingers right through the glass of the display and touching the information structures directly inside. And if what Engelbart was doing was the dawn of personal computing, what the RAND people were doing was the dawn of intimate computing.

Interviewer: AND IN INTIMATE COMPUTING YOU FORGET THAT IT'S A MACHINE AND YOU THINK OF IT MORE AS A MEDIUM.

Kay: Yeah, you start... and one of the things that completely took hold of me in using the GRAIL system was it felt more like a musical instrument than anything, because a musical instrument is something that -- most musicians don't think of their instruments as machines. And it's that closeness of contact, the fitness, that you're directly dealing with content more than the form of the content, that possessed me very strongly. And that was in 1968 as well, and I saw another several things. I saw Seymour Papert's early work with LOGO. Here were children writing programs, and that happened because they had taken great care to try and combine the power of the computer with an easy to use language. In fact they used the RAND JOSS as a model and used the power of LISP, which had been developed a few years before as an artificial intelligence language, put them together, and that was the early LOGO. And to see children confidently programming just blew out the whole notion of the automobile metaphor. And the thing that replaced it was that this is a medium. This is like pencil and paper. We can't wait until the kids are seniors in high school to give them driver's ed on the thing, they have to start using it practically from birth, the way they use pencil and paper. And it was destined not to be packaged on the desktop, because we don't carry our desks with us. It had to be something much smaller. And that was when I first started seriously thinking about a notebook computer. And of course the first thing I wanted to know after deciding that it had to be no larger than this was when would that be possible, if ever? And so I started looking at what the integrated circuit people were doing, Gordon Moore and Bob Noyce and stuff, and there were these nice projections that they, as confident physicists, had made about where silicon can go.

And what's wonderful is, these projections have only been off by a few percent now more than 20 years later. And of course I was very enthusiastic, I would believe anything that was in the right direction. So I took this hook, line, and sinker and said, okay, 20 years from now we'll be able to have a notebook-size computer that we can not only do all the wonderful things on computers, we can do mundane things too, because that's what paper is all about. You can write a Shakespearean sonnet on it, but you can also put your grocery list on it. So one of the questions I asked back in 1968 is, what kind of a computer would it have to be for you to do something so mundane as to put your grocery list on it, be willing to carry it into a supermarket and be willing to carry it out with a couple of bags of groceries? There is nothing special about that. You can do it with paper. So the question is -- see, the question is not whether you replace paper or not. The question is whether you can cover the old medium with the new. And then you have all these marvelous things that you can do with the new medium that you can't do with the old.

Interviewer: MANY PEOPLE FIND THE IDEA OF A MEDIUM A TRICKY CONCEPT. IN THE SENSE YOU WERE TALKING ABOUT WRITING AND SO FORTH OR MUSIC, WHAT DO YOU MEAN?

Kay: Well, I think most of my thoughts about media were shaped by reading McLuhan which not everybody agrees with but one of his points is that the notion of intermediary is something that is not added on to humans. It's sort of what we are. We deal with the world through intermediaries. We deal with our, we can't fit the world into our brain. We don't have a one to one representation of the world in our brain. We have something that is an abstraction from it and so we, the very representations that our mentality uses to deal with the world is an intermediary. So we kind of live in a waking hallucination. And, we have language as an intermediary. We have clothes as an intermediary. So this, this whole notion of what we think of as technology, could also be replaced by the word "medium." And I think there's a, even though media has this connotation of, you know, the news business and stuff like that, I think it's an awfully good word because it, because it gives us this notion of something being between us and direct experience. Interviewer: NOW, OF THOSE MEDIA, WRITING AND PRINTING OBVIOUSLY AS A MEDIA WE CAN ALL THINK ABOUT HAVE GREAT POWER, AND MUSIC AS WELL. WHAT DOES THAT MAINLY CONSIST OF?

Kay: Well, I think that, you know, the trade off with, with using any kind of intermediary is that any time you put something between you and direct experience you're alienating a bit. And, what you hope to get back from it is some kind of amplification. Various people who have studied evolution talk about the difference between the direct experience you get from kinesthetically touching something to the indirect experience you get of seeing it. And one is less involving than the other. The seeing is a good one because it means you don't have to test out every cliff by walking over it. And so there's an enormous survival value about stepping back. To have a brain that can plan and envision things that might happen, whether as images or even more strongly in terms of symbols, is of tremendous survival value because it allows you to try out many more alternatives. And so as the existentialists of this century have pointed out that the, we have gained our power over the world by, at the cost of alienating ourselves. So the thing that we-- Interviewer: ...A COMPUTER IS ANYTHING BUT A MACHINE IN A CERTAIN WAY. WHY SHOULD THAT BE A MEDIUM?

Kay: Well, I think, well, I think machine is a word that has a connotation that's unfortunate. Yeah, maybe what we should do is either elevate what we think of when you say machine, you know mechanism, kind of thing or maybe use a different word. To most scientists, machines are not a dirty word. Because we, one of the ways we think about things even in biology these days is that what we are is an immense, immensely complicated mechanism. That's not to say that it's predictable in the sense of free will because there's this notion now people are familiar with what's called chaotic systems, systems that are somewhat unstable. There's a lot of instability and stuff built in. It's a little more stochastic in a way, but the idea that there are things operating against each other and they make larger things and those things are part of larger things, and so forth. To most scientists it seems like a beautiful thing, it's not a derogatory term. Interviewer: I THINK IT'S ALSO THAT MOST MACHINES WERE BUILT TO DO USEFUL WORK, BUT THIS MACHINE IN PROCESSING INFORMATION HAS PROPERTIES WHICH ARE SORT OF LINGUISTIC AS WELL AS...

Kay: Yeah, well I think the connotation of machine is something that has to do with the physical world. Most people who haven't learned mathematics have never encountered the notion of an algorithm, which is a process for producing -- you could think of it as moving around physical things called symbols. I mean something physical is always being moved around, that's the way scientists look at the world. And the real question is, is this a trivial example of it or is this an unbelievably interesting example of it? So a flower is a very interesting example of a machine, and a lever is a somewhat trivial and more understandable notion of a machine. Interviewer: NOW THESE MACHINES CAN MOVE AROUND SYMBOLS PRESUMABLY, LIKE THE ELECTRONIC VOLTAGES OR WHATEVER, SO FAST THAT THEY CAN DYNAMICALLY SIMULATE VIRTUALLY ANYTHING YOU WANT... Kay: Lots of things, yeah. One way to think of it is that a lot of what a computer is, is markings. And just as it doesn't matter whether you make the markings for a book on metal or paper or you make them in clay, there are all kinds of ways of making the equivalent of a book. The main thing about a marking is you have to have some way of distinguishing it from other markings, and once you've got that then they can act as carriers of different kinds of descriptions. And the range of the markings that you can have in a book, and the range of markings you can have in a computer are the same.

Interviewer: SO LIKE IN A COMPUTER IT STARTED WITH PUNCH CARDS. Kay: Yeah, it doesn't matter what it is. You can make, there's a wonderful computer built out of Tinker Toy in the Computer Museum in Boston. It was done by some MIT grad students. You can make it out of anything. And so that's one set of ideas, that is, the representational properties of things are very very much like a book, but then the computer has this even more wonderful thing, is that it's a book that can read and write itself. And moreover it can do it very quickly. So it has this self-reflexivity that you usually only find in biological systems. And it can carry through these descriptions very, very rapidly. Okay and then you get, then everything that's the big nirvana experience where you suddenly realize "Holy Smokes this thing is a pocket universe." And it has a nice complementary role to the way we deal with our physical universe as scientists. The physical universe especially in the nineteenth century was thought to have been put there by God and it was the job of scientists to uncover this glorious mechanism. So in the computer what you do is you start off with a theory, and the computer will bring that theory to life so you can have a theory of the universe that is different than our own, like it has an inverse cube law of gravity. And you can sit down and in not too much time you can program up a simulation of that and discover right away that you don't get orbits anymore with planets. And that's curious and a little more thinking and deeper delving will soon make you discover that only the inverse square law will actually give you orbits. Interviewer: THAT'S CURIOUS, BUT THE OTHER WAY YOU PUT IT ONCE IS YOU COULD INVENT A UNIVERSE WITH NO GRAVITY, RIGHT?
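
The inverse cube experiment Kay describes can be sketched in a few lines of Python. This is only an illustrative sketch, not anything from the interview: the function names, the unit mass and force constant, the step size, and the collapse and escape radii are all assumptions chosen for the demonstration. A planet starts one unit from the sun with a speed slightly below or above the speed a circular orbit would need, and the force falls off as 1/r^2 or 1/r^3. Strictly speaking, an inverse cube law still permits a perfectly circular orbit, but it is unstable, so any small nudge makes the planet spiral in or drift away, which is the effect the sketch tries to show.

```python
import math


def acceleration(x, y, exponent, k=1.0):
    """Attractive central force of magnitude k / r**exponent on a unit mass."""
    r = math.hypot(x, y)
    # The extra power of r turns (x, y) into a unit vector pointing outward.
    scale = -k / r ** (exponent + 1)
    return scale * x, scale * y


def simulate(exponent, speed, dt=1e-3, t_max=50.0, r_collapse=0.1, r_escape=10.0):
    """Integrate one planet with velocity Verlet steps.

    Returns (status, r_min, r_max), where status is "bound", "collapsed",
    or "escaped". All thresholds are hypothetical demo values.
    """
    x, y = 1.0, 0.0          # start one unit from the sun
    vx, vy = 0.0, speed      # purely tangential initial velocity
    ax, ay = acceleration(x, y, exponent)
    r_min = r_max = 1.0
    for _ in range(int(t_max / dt)):
        # Half kick, drift, recompute force, half kick.
        vx += 0.5 * dt * ax
        vy += 0.5 * dt * ay
        x += dt * vx
        y += dt * vy
        ax, ay = acceleration(x, y, exponent)
        vx += 0.5 * dt * ax
        vy += 0.5 * dt * ay
        r = math.hypot(x, y)
        r_min = min(r_min, r)
        r_max = max(r_max, r)
        if r < r_collapse:
            return "collapsed", r_min, r_max
        if r > r_escape:
            return "escaped", r_min, r_max
    return "bound", r_min, r_max


if __name__ == "__main__":
    # With k = 1 and r = 1 the circular-orbit speed is 1 for either law.
    for exponent in (2, 3):
        for speed in (0.95, 1.05):
            status, r_min, r_max = simulate(exponent, speed)
            print(f"1/r^{exponent}, speed {speed}: {status} "
                  f"(r from {r_min:.2f} to {r_max:.2f})")
```

Under these assumptions the inverse square runs should stay on closed ellipses, while the inverse cube runs should either fall into the center or wander off, depending on which side of the circular speed the planet starts.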

Kay: Yes, and in fact, I think, it's again the difference between a physical book which when, you can drop it and it will fall down to the floor, and the story that's in the book that could be about a place that has no gravity, like out in space. And the computer is at once a physical thing and the stories that it holds don't have to have anything to do with the physical universe its components reside in. So, in other words you can lie, both with books and with computers -- and it's important because if you couldn't lie you couldn't get into the future. Because we are living in a world of things that were complete lies 500 years ago. Interviewer: NOW THE THING ABOUT THE COMPUTER AS WELL, APART FROM BEING DYNAMIC, ITS ABILITY TO SIMULATE MEANS THAT IT CAN EMBODY ALL OTHER MEDIA. IT IS THE FIRST META-MEDIUM, ISN'T IT? Kay: Yes, yes, I called it the first meta-medium in the, in the early '70s when I was trying to explain what it was as being distinct from big mainframes with whirling tape drives, that... The physical media that we have like paper and the way it takes on markings are fairly easy to simulate. The higher the resolution display you get, the more you can make things look like a high-resolution television camera looking at paper. That was one of the things that we were interested in at Xerox PARC was to do that kind of simulation. And there are deeper simulations than the simulation of physical media. There's the simulation of physical processes, as well. Interviewer: NOW, IF THEN -- THIS IS PRETTY MIND-BLOWING, BECAUSE THE COMPUTER THEN IS NOT JUST IMPORTANT AS A VERSATILE TOOL, A NEW MACHINE, WHAT WE'RE TALKING ABOUT IS SOMETHING OF THE GRAVITY OF THE INVENTION OF WRITING OR PRINTING. IN POPULAR TERMS, WE'RE TALKING ABOUT SOMETHING REALLY PRETTY FANTASTIC.

Kay: Right. It's sort of sneaking its way in the way the book did. The Catholic Church didn't, I think the Catholic Church thought that the book might be a way of getting more bibles written in Latin, and so by the time they started thinking about suppressing it, it was too late because of course, you could print all these other things, and all of a sudden you go from having 300 or 400 books in the Vatican Library in the year 1400 to something 100 or 150 years later where there are some 40,000 different books in circulation in Europe and 80 percent of the population could read them. And so all of a sudden you have something totally at odds with most ways of dealing with religion, which is multiple points of view. Interviewer: WHAT CAN WE LEARN, LOOKING AT THE HISTORY OF THE BOOK. CLEARLY IT TOOK A LOT LONGER TO HAPPEN, RIGHT, FOR ONE THING. BUT WHAT WERE THE STAGES IT HAD TO GO THROUGH TO GET TO THAT POINT?

Kay: Well, I think in doing these analogies, analogies can be suggestive, you know. And of course, the amount that analogy carries through from one place to another depends on lots of things. I happen to like the analogy to the book, quite a bit because you have several stages, you have the invention of writing, which is a huge idea, incredibly important. And the difference between having it and not having it is enormous. The difference between having computers even if they're big, just big mainframes and not having them, is enormous. There're just things you cannot do without them. Then the next stage was the Gutenberg stage and Gutenberg just as McLuhan liked to say, "when a new medium comes along it imitates the old." So Gutenberg's books were the same size as these beautiful illuminated manuscripts that were done by hand. And big like this and in the libraries of the day, back then the books generally weren't shelved. There were so few books in a given library that they actually had their own reading table. And if you look at woodcuts of the day, it looks for all the world like a time-sharing bullpen. You know, you go into the library, there's one library in Florence that's set up that way. You go over to the table that the particular book is at, they are chained in because they were too valuable, they were priceless. And Gutenberg imitated that, but of course he could produce many more books. But he didn't know what size they should be, and it was some decades later that Aldus Manutius, who was a Venetian publisher, decided that books should be this size. He decided they should be this size because that was the size that saddle bags were in Venice in the late 1400s. And the key idea that he had was that books could now be lost. And because books could now be lost, they could now be taken with you. And they couldn't be like this, they had to be something that was a portable size.
And I think in very important respects where we are today is before Aldus. Because this notion that a computer can be lost is not one that we like yet. You know, we protect our computers. We bolt them to the desk and so forth. Still quite expensive. They'll be really valuable when we can lose them. Interviewer: THE OTHER POINT ABOUT THE POINT OF LITERACY, IS ALSO WELL TAKEN THERE. WHILE THERE ARE VERY, WHILE THERE ARE NO BOOKS TO READ I ASSUME THERE'S NO POINT IN IT...

Kay: Right, well if reading and writing is a profession you don't have the notion of literacy and illiteracy. Like, there's no word in our language that stands for "il-medicine." There is medicine, which is practiced by professionals, and there is no notion of il-medicine. Now if we all learned medicine, if that were part of, and it may be some day that staying healthy might be something that becomes an important part of everyone's life then there'll be a notion of medicine and il-medicine. And so it wasn't until there was a potential for the masses to read that we had the notion of literacy and illiteracy. And to me literacy has three major parts that you have to contend with. One is you have to have a skill to access material prepared for you by somebody else, regardless of what it is that you're you're trying to be literate in. In print mediums it's called reading, reading/accessing. I don't think many people in our society would think that a person who just could read was literate. Because you have to be able to create ideas and put them into the information stream, so you have creative literacy, which is the equivalent of writing. And then finally after a medium has been around for awhile you have the problem that there are different genre of these things so that you have to go into a different gear to read a Shakespearian play. Now there's a different way of doing it. The way they wrote essays 200 years ago is different than the way they write essays today, and so you have to have some sort of literacy in the different styles for representing things. And so I think those three things when something new comes along, since we have we're talking about media, we could talk about "mediacy" and "il-mediacy," or "computeracy" and "il-computeracy," or something like that. And I think each one of those things is a problem that has to be solved. 
There's the problem of how can you, when you get something new made for a computer, be able to do the equivalent of read it - of access what it is. You go to a computer store and get some new wonderful thing, slap it into your computer and if you have to learn a new language in order to use the thing, then things are not set up for you being computer literate. Interviewer: SO WRITING IN LATIN WOULD BE ANALOGOUS TO RATHER DIFFICULT...

Kay: Yeah I think it has a lot to do with that, and again to sort of strain the analogy we're making with printing, my other hero back then was Martin Luther who a few years later had this intense desire to let people have a personal relationship with God without going through the intermediaries of the church. And he wanted people to be able to read the bible in their own language and knew that German which was a bunch of different regional dialects back then, mostly for talking about farming and stuff was not going to be able to carry the weight of the bible, as it was portrayed in Latin which is a much more robust and mature language. So Martin Luther had to invent the language we today call High German, before he could translate the bible into it and so the language that Germans speak today is in a very large part the invention of a single person who did it as a user interface, so that he could bring the media much closer to the people, rather than trying to get everybody in Germany to learn Latin. Interviewer: NOW WITH THAT SORT OF INSIGHT, WE COULD THINK THIS WHOLE PROCESS LITERALLY TOOK A LONG TIME, TOOK MANY CENTURIES, AND CAN YOU SAY, THE EARLY PHASES OF THE COMPUTER HAVE GONE QUITE MUCH MORE RAPIDLY HAVEN'T THEY?

Kay: Right, I think, well I think we're part you know, things are going more rapidly. But also to the extent that analogies help, you know, certainly that progression, I happen to know, be a big fan of books, and I happen to know that progression of of illuminated manuscripts which I absolutely adored when I was a child. The Gutenberg story, the Aldus story; he was one of my great heroes, because he was the guy who decided he had to read more than one book. And then Martin Luther. Those immediately sprang to mind as soon as I had decided that the computer wasn't a car anymore. And then, then the thing becomes the two most powerful things that you have to do right away. You don't have to wait to gradually figure them out. You have to get the thing small and you have to find a language that will allow people, that will bring what it is closer to the people rather than the other way around. Interviewer: NOW AN OPPORTUNITY CAME FOR YOU, I KNOW YOU WERE GOING TO MAKE YOUR DYNABOOK ONE WAY OR ANOTHER, BUT AN OPPORTUNITY CAME FOR YOU TO GET TO A BRAND NEW CENTER WHEN YOU WENT TO XEROX PARC.

Kay: Yeah, I thought up the Dynabook in 1968 and made a cardboard model of it, and a couple of years later I was about to go to Carnegie Mellon University to do the thing, and I had been consulting a little bit for the newly formed Xerox PARC, and it just got to be too much fun, so I never wound up going to Pittsburgh. Interviewer: WHAT WAS XEROX PARC? Kay: Xerox PARC was an attempt by Xerox to spend part of its research and development money on really far out stuff that was not going to be particularly controlled by them. In the late '60s they bought themselves a computer company, they bought a whole bunch of publishers. They were trying to get into a larger sphere than just office copiers. One of the ringing phrases of the late '60s was "Xerox should be the architects of information." And so they had this expansive feeling about getting into other areas, and they got Jack Goldman in, who was a scientist at Ford, as the new chief scientist at Xerox, and Jack realized that what we actually had to have at Xerox was something like a long range research center. Xerox was a big company even then, and what Jack decided to do was to hire George Pake who was the chancellor of Washington University in St. Louis and George was quite familiar with ARPA and so he hired Bob Taylor who had been one of the funders of ARPA in the '60s. Taylor was one of the champions of Engelbart, one of the champions of the work at RAND and so forth. What Taylor did was to, instead of hiring random good scientists, what he did was to decide to set up a miniature concentrated ARPA community. So he went out after all of the people he thought were the best in the ARPA research community and those people were somewhat interested because the Mansfield Amendment was shutting down, constricting the kinds of far out things that ARPA could do, so it was one of those things where the... Interviewer: WHAT WAS THE MANSFIELD AMENDMENT?

Kay: The Mansfield Amendment was an overreaction to the Vietnam War. A lot of people were worried about various kinds of government and especially military funding on campus and secret funding and all of this stuff. And the the ARPA information processing techniques projects got swept up in this even though every single one of them was public domain and had already been spun off into dozens of companies including DEC. And so this is this is just one of these broad brush things done by Congress that happened to kill off one of the best things that's ever been set up in this country. And so a lot of smart people in universities who believed more or less the same dream about the destiny of computers were gathered up by Taylor, and I was one of those. Interviewer: SO HOW GOOD WERE THEY -- YOU WERE TALKING ABOUT THE PEOPLE THAT WERE GATHERED, HOW GOOD WERE THEY? Kay: Well, I thought they were the best.

Interviewer: OKAY. HOW GOOD WERE THEY, ALAN? Kay: I thought the people that were there were among the very best. In my personal pick, by 1976 which was about six years after the place was started there were 58 out of my personal pick of the top 100 in the world. And divided into a couple of labs. People who had very strong opinions who normally would not work with each other at all, in any normal situation, were welded together by having known each other in the past, having come up through the ARPA community believing more or less the same things, of course, when you have that kind of level of people, the disagreements in the in the tenth decimal place become significant. But it worked out very well because we were able to get together completely on joint hardware projects, so we built all of our own hardware as well as all of our own software there. And the hardware was shared amongst the many different kinds of groups that were there, so there was one set of hardware, as we moved on from year to year and then many different kinds of software design. Interviewer: AS XEROX HAD THIS IDEA OF ARCHITECTURE OF INFORMATION, A RATHER GENERAL CONCEPT, WHAT DID YOU GUYS THINK YOU WERE DOING?

Kay: Well, the psychology of that kind of person would be thought of as being arrogant in most situations. We thought we knew, we didn't know exactly what we were going to do, but we were quite sure that nobody else could do it better, whatever it was. And the architecture of information, that was a phrase that sounded good, it didn't actually mean anything. And there was some mumbling also about if paper goes away in the office in the next 25 years, Xerox should be the company to do it. Because that was their heartland business, but Taylor was setting the place up to realize the ARPA dream. Which was to do man-computer symbiosis in all of the different directions that ARPA had been interested in the past which included interactive computing, which included artificial intelligence and the notion of agents. So there're projects that range from being intensely practical ones, like Gary Starkweather doing the first laser printer. Metcalfe and other people doing the Ethernet, the first packet switching local area net. Thacker being the main designer of the Alto which was the first work station and the first computer to look like the Macintosh. I was head of the group that was interested in the Dynabook design and a lot of the things that we came up with for the Dynabook were simulated on the Alto and became part of the first notion of what work stations and personal computers should be like. And those included things like the icons and the multiple overlapping windows and the whole, the whole paraphernalia that you see on machines like the Macintosh today. Interviewer: THE BASIC IDEA WAS, THE SORT OF MACHINE YOU WERE INTERESTED IN WAS ONE WHICH HAD THE CAPACITY TO HANDLE RICH GRAPHICAL INTERFACES, BUT COULD BE NETWORKED TOGETHER. SO IT HAD TO HAVE MORE STAND ALONE POWER THAN NORMAL TIME-SHARING, IS THAT TRUE?

Kay: Yeah, in fact one of the things we were incredibly interested in was how powerful did it have to be. We used phrases like, we didn't know how powerful it had to be, but it had to be able to do something like 90 or 95 percent of the things that you would generally like to do before you wanted to go to something more -- of course, we didn't know what that meant. We thought it would be somewhere around ten MIPS (million instructions per second), that was sort of the rule of thumb. The machine we built was effectively some, it was somewhere between one and three MIPS, depending on how you measured it. And so it was less powerful than we thought. On the other hand it was about 50 times as powerful as a time-sharing terminal. So the magnification of what we could aspire to was enormous. And the machine was not done blindly. It was done after about a year of studying things like fonts, and what happens when the eye looks at little dots on screens, and what animation means, and we did a bunch of real time music very early synthesis kinds of stuff and so we knew a lot about what the machine should be and how fast it should run. Interviewer: SO TO DESIGN THIS MACHINE AND THE SOFTWARE THAT WENT WITH IT YOU STUDIED HUMAN BEINGS?

Kay: Yeah. A lot of us did. I mean what was nice here is I was one I was one of the drivers on one of these small machines. There were other groups at this time in 1971 when PARC was forming who were building a large time-sharing system to act as a as a kind of an institutional resource for PARC. There were lots of different things going on and I wanted to do stuff with children because my feeling after doing the FLEX machine was that we, and I especially, were not very good at designing systems for adults. And so then I thought, well children have to use this thing, why don't we forget about adults for a while, we'll just design a tool for children, a medium for children, and we'll see what happens. So I desperately wanted to get a bunch of these systems. I needed like fifteen of them. I needed to have them in a school somehow, with children, Because I wanted to see how children reacted to these things, and improve the design that way. Interviewer: WE'LL KEEP GOING DON'T WORRY ABOUT IT. SO THE IDEA WAS THAT WHEN YOU USED CHILDREN IT THREW INTO FOCUS MANY OF THE PROBLEMS YOU HAD BEFORE?

Kay: Yeah. I don't know that it threw into -- the shock when you go out to real users of any kind is enormous. Because technical people live in this tiny little world actually. We like to think it's a big world, but it's actually a tiny little world. And it's full of phrases that we learned when we were taking math classes, and it's, it's hermetic. And it's full of people who like to learn complicated things, they delight in it. And so one of the one of the hardest things is for these kind of people to do any kind of user interface for people who are not like that at all. That is the number one dictum of user interface design, is the users are not like us. And so what you need to have is some way of constantly shocking yourself into realizing that the users are not like us. And children do it really well, because they don't care about the same kinds of things that adults do, you know, they're not like this, they're not like that, they can always go out and play ball if, they haven't learned to feel guilty about not working yet. And the last thing you want to do is subject the children to more school. You don't want to put them through a training course to learn a computing system. So we use the term forcing function. It was a setup where in many ways it's harder to design a system for children than it is for adults, along a couple of couple of avenues. And it forced us to start thinking about how human mentalities might work. Interviewer: THESE WERE YOUR SMALLTALK KIDS, RIGHT?

Kay: These eventually became the Smalltalk kids. And the lead into that was that Seymour Papert had gotten a lot of good ideas from having worked with Piaget. And Piaget had a notion of the evolution of mentality in early childhood as being sort of like a caterpillar into a butterfly. And the most important idea of it was that at each stage of development, the kid was not a deficient adult. That it was a fully functioning organism. Just didn't think about the world quite the same way as adults did. And other psychologists like Jerome Bruner had similar ideas, also inspired by Piaget. Interviewer: WHAT WERE THESE BASIC STAGES? Kay: Well, if you break them down into just three stages, one in which a lot of thinking is done just by grabbing. A hole is to dig it, an object is to grab it. And then the next stage is very visual, in which many of the judgments that you make about the world are dominated by the way things look, so in this stage you have the Piaget water pouring experiment, you know you pour from a squat glass into a tall thin one and the kid in this stage says there's more water in the tall thin one. He doesn't have an underlying notion, a symbolic notion, that quantities are conserved. He doesn't have what Piaget calls "conservation." And conservation is not a visual idea. Visual is what does it look like when you compare it. And then later on you get a stage that Piaget called the "symbolic stage" where facts and logic start to dominate the ways of thinking, and a child in that stage knows as a fact that water doesn't appear and disappear. Nor does anything else. And so it's able to give you judgments or inferences based on these facts rather than by the way things appear. Now Bruner did a lot of these experiments over again and added some sort of side trips into them.
So one of the things he did in the water pouring experiment was to intersperse a cardboard after the child had said there's more water in the tall thin glass, and the kid immediately changed what he had just said, he said, "Oh no, there can't be more because where would it come from." And Bruner took the card away, and the kid changed back, he said, "No, look, there is more." Bruner put the card back and the kid said, "No, no there can't be more, where would it come from?" And so if you have any six year olds you'd like to torment, you know this is a pretty good way of doing it. And the way Bruner interpreted this is that we actually have separate ways of knowing about the world. One of them is kinesthetic, one of them is visual, one of them is symbolic, and of course we have other ways as well. And that these ways are in parallel. They really constitute separate mentalities. And because of their evolutionary history they are both rather distinct in the way that they deal with the world, and they are not terribly well integrated with each other. So you can get some remarkable results by telling people an English sentence which they'll accept and then showing them the picture that represents the same thing and have an enormous emotional reaction. Interviewer: HOW WAS THIS GOING TO HELP YOUR INTERFACE DESIGN?

Kay: Well, you know we were clutching at straws! You'll do anything, when you don't know what to do. But it seemed like a reasonable thing, since we're going to be dealing with humans, it seemed like a reasonable thing to try and get something out of the newly formed cognitive psychology to do it, and the thing that got me... The Piaget stuff didn't help me much. Because one of the ways of interpreting it is that for example, you shouldn't probably teach first graders arithmetic because they aren't quite set up to receive it yet. You'd be better off teaching them something that's more kinesthetic like geometry or topology. They're just, they're learning, they're tying their shoes and stuff and there are a whole bunch of things you can do with knots and things that are higher mathematics. But the particular symbolic manipulations of arithmetic are not easy for them at that stage. Well, that's important when you're designing curriculum in schools but it didn't tell us much about a user interface. The Bruner thing though was really exciting. Because one of the ways of interpreting it is that at every age in a person's life there are these multiple mentalities there. Now when your kid is six years old, the symbolic one may not be functioning very much, but there are tricks that you can get it to function. And Bruner had this notion that you could take an idea that's normally only taken, taught symbolically like some physical principle, and teach it to a six year-old kinesthetically, and to a ten year-old visually. And later on to a fifteen year-old you can teach it symbolically. This notion of a spiral. And Papert had the same idea. If you want to learn how a gyroscope works, the last thing you want to do is look at the equation.
What you want to do is do a little bit of visual reasoning about the thing and then have one in your hand in the form of a big bicycle wheel, and when you turn that bicycle wheel and feel what it does, it clicks together with a small amount of reasoning you are doing and all of a sudden you understand why it has to flop the other way. But there's nothing that mysterious about it, but from the outside and looking at the equation, you know and thinking about cross products and stuff like that it just doesn't make any sense. So Bruner's idea was when you don't know what else to do in developing a curriculum, you try recapitulating the Piagetian stages, cast this adult level knowledge down into a kinesthetic form and recast it later on. Teach it over and over again, recast it later on in a visual form. And he thought of these lower mentalities, the kinesthetic and iconic mentalities, as being what we call intuition. They can't talk much but they have their own figurative ways of knowing about the world that supply a nice warm way of thinking about physical problems. Interviewer: ENGELBART HAD SOMETHING...ALREADY THE MOUSE IS A KINESTHETIC...

Kay: Yes, but not a good one. It's okay. But if you only use it to point on the screen it's not very, it's not very kinesthetic. Because the major operation in kinesthesia is grabbing something and moving it. And Engelbart did not have that. And that was one of the first, of course people had been moving things around in computer graphics and most people that had ever done this liked it better. The first little paper I wrote about the Dynabook talked about moving things around and GRAIL moved things around. Sketchpad moves things around. It's a natural thing when you're thinking about things from a graphic center, which Engelbart wasn't, even though he had a little bit of graphics he was thinking about it from text. Think about things from a graphic center. It's natural to want to just move something around. And so the connection with what had already been done, with what Bruner was saying got us thinking a little bit more strongly about what the mouse was supposed to be about. The mouse was not just for indicating things on the screen, it was to give you a kinesthetic entry into this world. Now the important thing about it is that, I don't know how all scientific discoveries are made. They usually are connected together with little flashes of insight over long periods of time, but in fact when I started off I was quite sure that Bruner had the key and we got the ingredients rather early for doing the user interface. But it was remarkable to me how many people we had to try ideas out on for years. Three or four years we had to not only build the system but in fact the early versions of the system were not as good as later versions of the system, and it actually took about five years and dealing with hundreds of people to come out with the first thing that sort of looked like the Mac. Interviewer: AND THIS IS THE DESKTOP...

Kay: Yeah, the desktop was actually an idea we didn't like particularly. That was something that was developed as a -- when Xerox was thinking specifically of going at offices. Interviewer: THIS WAS THE ICONS, WINDOWS... Kay: Yeah, in the Dynabook idea, see when you have a Dynabook and you have simulations and multidimensional things as your major way of storing knowledge, the last thing you want to do is print any of it out. Because you are destroying the multidimensionality and you can't run them. So one of the rules is if you have anything interesting in your computer, you don't want to print it. And that's why if your computer is always with you, you won't print it. When you give it to somebody else what you're sending to them is something that was in your Dynabook to their Dynabook. Okay, and the user interface that we worked on was very much aimed at that, and so it had a different notion than the, the desktop was sort of a smaller version of it. What we had was this, you can think of it as multiple desks if you want, but we had this notion that people wanted to work on projects, and I especially liked to work on multiple projects and people used to accuse me of abandoning my desk. You know it got piled up and I'd go to another one and pile it up. And when I'm working on multiple things I like to have a table full of all the crap that goes there and another table and so forth, so everything is in the state it was when I last left it. And that was the user interface that we wound up doing where you could move from one project to another project and, if you did it on the Macintosh, you could think of it as having multiple Macintosh desks, each one of which was all the tools for that particular project. Interviewer: THE REASON WHY THESE, YOU USED THE TERM INTUITIVE BEFORE, OR WE FEEL THINGS THAT USE OUR KINESTHETIC AND ICONIC MENTALITIES ARE INTUITIVE. ALSO THE TERM LEVERAGE IS USED. 
CAN YOU TELL ME WHAT YOU MEAN WHEN YOU SAY, WHEN YOU SUCCEED, WHEN YOU CONFER LEVERAGE ON A USER?

Kay: Yeah, well, I think -- I mean, another part of my background before I was heavily into computer science, was theater. And if you think about it, theater is kind of an absurd setup, because you have perhaps a balding person in his forties impersonating a teenager in triumph, holding a skull in front of cardboard scenery, right? It can't possibly work. But in fact it does all, all the time. And the reason it does is that theater, the theatrical experience is a mirror that blasts the audience's intelligence back out at them. So it's, as somebody once said, "People don't come to the theater to forget, they come tingling to remember." And so what you -- what it is is an evoker. And again, having the thing go from an automobile to a medium started me thinking about user interface as a theatrical notion. And the -- what you do in the theater is to take something that is impossibly complex, like human existence or something, and present it in something that is more like stereotypes, which the user can then resynthesize human existence out of the, the -- I think it was Picasso who said the, that art is not the truth, art is a lie that tells the truth. And so I started thinking about the user interface as something that was a lie, namely, it really isn't what's going on inside the computer, but it nonetheless tells the truth because it evokes, it's a different kind of computer. It evokes what the computer can do in the user's mind and that's what you're trying to do. So that's the computer, they wind up going. And, and that's what I think of as leverage. Interviewer: SO JUST AS THE TIME-SHARING, YOU SORT OF HAD THE ILLUSION THAT YOU HAD EXCLUSIVE USE OF THE COMPUTER. HERE, YOU HAVE A DIFFERENT ILLUSION THAT YOU'RE MOVING THINGS ON, THAT THERE ARE OBJECTS ON YOUR SCREEN.

Kay: Yes. In, in fact, we used the term user illusion. I don't think we ever used the term user interface, or at least certainly not in the early days. And we did not use the term metaphor, like as in desktop metaphor. That was something that I think came along later. The idea was what, what can you do theatrically that will cover the amount of magic that you want the person to be able to experience. Because, again, the understanding was in the theater, you don't have to present the world as physicists see it, but if you're going to have magic in Act III, you better foreshadow it in Act I, so there's this whole notion of how do you dress the set, in order to set up expectations that will later turn out to be true in the, in the audience's mind. Interviewer: NOW WHILE YOU WERE WORKING ON THESE THINGS, OTHER PEOPLE AT PARC WERE BUILDING A MACHINE? Kay: They were building various machines. And it was a -- it's kind of funny, because the way it worked out is I had come up with a, took Wes Clark's LINC design and came up with a version, sort of a modern version of that in a little handheld, you know, sort of like a suitcase, that I called MiniCOM, and had done some design of it, how things would run on it, and the problem was it wasn't powerful enough. But it was something I figured I could get 15 or 20 of for the experiments that we wanted, and I had designed the first version of Smalltalk for this machine. And much to my amazement I got turned down flat by a nameless Xerox executive. And I was crushed that, you know, no, we're doing time-sharing ... sorry.

Interviewer: WE'LL START AGAIN...JUST GO FROM WHERE YOU SAID YOU WERE TURNED DOWN BY A XEROX EXECUTIVE. Kay: Okay, so ... I couldn't believe that a, this, an unnamed, a nameless Xerox executive turned me down flat as far as the idea of building a bunch of these things. And I was crushed. You know, I was only, I was 31 years old then, and, just, ach, it was terrible. And so then I evolved another scheme of getting a bunch of little minicomputers, like Data General Novas, and I could only afford five of those -- I had something like $230,000 or so, and I figured five of those, and take the system they were experimenting with fonts and bitmap displays on and I'll replicate that, I'll get five of them -- it's an incredibly expensive way to do it, but that would give me five machines to do stuff. And then this Xerox executive went away on a, on a task force, and Chuck Thacker and Butler Lampson came over to me in September of 1972 and said to me, "Have you got any money?" And I said, "Well, I've got about $230,000." And they said, "Well, how would you like us to build you your machine?" And I said, "I'd like it fine." And so what I gave them was the results of the experiments I had done in fonts and bitmap graphics, plus we had done some prototyping of animations and music. So to do all these things that had to be so and so fast. And what happened there was a combination of my interest in a very small machine and Butler Lampson's interest in a $500 PDP-10 someday. And Chuck Thacker wanted to build a machine 10 times as fast as a Data General Nova. And Chuck was the hardware genius, so he got to decide what it was in the end, and he went off and in 3 1/2 months with two technicians completely built the first machine that had the bitmap display. And he built it not as small as I wanted it, but ten times faster than I had planned, and it was a larger capacity machine. 
So it was simultaneously both the first thing that was like a Mac, and the first workstation. Interviewer: THIS WAS THE ALTO.

Kay: An absolute triumph. Yeah, this was the Alto. Interviewer: WHAT WAS THE BITMAP DISPLAY? WHY WAS THAT SO . . . ? Kay: Well, it was -- I mean that was fairly controversial, because the, the problem is that bits were expensive back then. And before about 1971, bits were intolerably expensive, because in memory they were in the form of these little magnetic cores, and just even a modest memory was many cubic feet, and required expensive read sense amplifiers and power supply -- it was a mess. And in the early '70s, Intel came out with a chip called the 1103, that had a thousand bits on it. Right? The chips you buy today have like 4 million bits, and the Japanese are making ones with 16 million -- 64 million bits. So this was a thousand bits on a chip. This is the best thing that ever happened. Because all of a sudden, everything changed. This is one of these predictions that Bob Noyce and Gordon Moore made, that it completely changed the relationship of us to computing hardware. And the people who wanted to build a big time-sharing machine at PARC, including Chuck Thacker and Butler Lampson, immediately started building one out of this memory. And it was also the right kind of thing to build the Alto out of. But we still couldn't have a lot of it. This was 1972 that we're talking about. And so this notion -- bitmap display is something that we didn't invent. People had talked about it, there even were small versions of it. Television -- Interviewer: EACH PART --

Kay: Television is something that is discrete in one dimension and analog in another dimension but you can think of it as being 300,000 separate pixels on a screen, and it's something everybody would like, because it's perfectly general. The nicest thing about television is that you don't have to determine beforehand what kind of picture you're going to show on it. You can show anything. You don't have to worry. Whereas every other kind of display, you had to have some notion about what you were going to display in order to know what kind, how much power you should build into the display. So we spent a year and a half or so trying different kinds of displays and making calculations and stuff, and at some point we realized, oh, the heck with it, you know, we just have to go for a bitmap display, and of course, we didn't know that people would settle for tiny little displays like this, you know, so the first display on the Alto actually was about two and a half times the size of the first Mac. It was about 500,000 pixels and it was an 8 1/2 x 11 page display, the exact size that the Dynabook was supposed to be -- Interviewer: IS IT TRUE YOU PROGRAMMED THE COOKIE MONSTER? IS THAT TRUE?

Kay: The Cookie Monster, the first, I invented the first painting program in the summer of 1972 and it was programmed up by Steve Purcell, who was one of our students, and again the idea was to get away from having just crisp computer graphics, as they had been in the past, because the idea was that people don't design on computers, and one of the reasons they don't design on computers is they can't make things messy enough. And my friend Nick, Nicholas Negroponte at MIT was also thinking similar thoughts. He wanted people to be able to sketch as well as to render. And so I invented the, the painting program. The painting program was a direct result of thinking seriously about bitmap displays. It, it's maybe the first thing you think of. Because you are always painting on it, when you're putting up characters, you're painting characters. And it was very much like MacPaint, it turned out to be many years later. I have a nice video showing it being used. And the second thing I thought of was that having a bitmap display with a, combined with multiple windows like the FLEX machine had would automatically allow us to do this overlapping idea, which we all thought was terribly important, because the, we thought this 8 1/2 x 11 display was impossibly small for all the stuff we wanted to put on, and the overlapping windows would give you a way of having much more effective screen space, as you, as you move things around. Interviewer: NOW THIS MACHINE, THE ALTO, THE PROTOTYPE WAS PRODUCED IN 1973. HOW DOES IT COMPARE IN TERMS OF WHAT IT'S GOT, COMPARED WITH THE MACINTOSHES AND THE MACHINES WE HAVE NOW?